Article by Tim Feran, originally posted on Columbus Underground

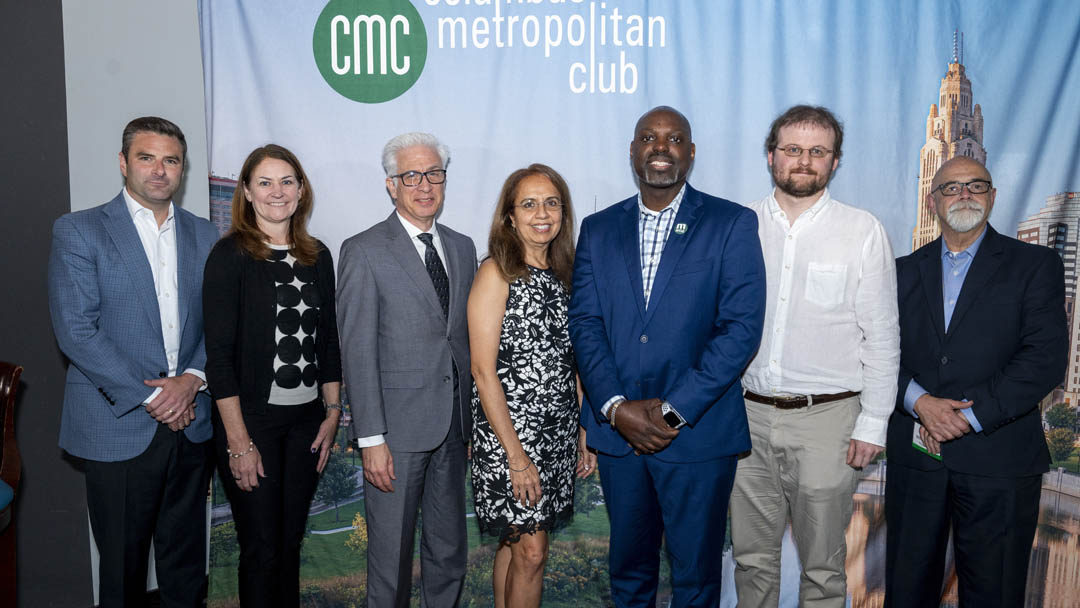

In an unusual move, the Columbus Metropolitan Club asked one of its panelists to come up with the title for its Wednesday, July 12, forum. While the request was unusual, it was entirely appropriate.

The panelist in question was ChatGPT, the artificial intelligence “chatbot.”

Appearing as a woman’s voice and as text projected on a large screen, ChatGPT wasn’t the only panelist at the forum, titled “Exploring the Promise and Peril of Artificial Intelligence,” but it was the only non-human.

Trained on a diverse range of internet text and other data sources, ChatGPT has been designed to generate human-like responses and engage in virtual conversations with users. Its purpose is to assist and provide information on various topics.

More than a few audience members already enjoy interacting with the chatbot, as forum moderator David J. Staley found out after he asked the first question of the day.

“How many use ChatGPT?” asked Staley, Associate Professor of History, Interim Director of the Humanities Institute, and Director of the Center for the Humanities in Practice at The Ohio State University.

When almost every hand in the room was raised, Staley remarked, “Wow.”

The technology’s temperamental nature was on display as Kevin Lloyd, Co-Founder and CEO of MYLE, and COO of ColorCoded Labs, worked to get ChatGPT to respond out loud to questions.

“This is what happens when you try to use technology in real time,” Lloyd said, chuckling.

Another panelist offered a more sober view.

“It’s really not a conversation model,” said Andrew Perrault, Assistant Professor of Computer Science and Engineering at The Ohio State University. “It creates this kind of illusion.”

With ChatGPT up and running, Lloyd shared some of his enthusiasm for the technology. “As an entrepreneur, we actually do a lot of ChatGPT on a regular basis,” he said. “A lot of cases will validate your business model… in seconds.”

Staley, who writes a monthly column in Columbus Underground, clarified that while ChatGPT tries to complete a thought — much as the autocorrect function on a computer tries to complete the spelling of a word — “we’re not actually having a conversation. It’s a simulation of a conversation.”

Padma Sastry, Adjunct Professor at Franklin University, pondered the ethical aspects of ChatGPT, while acknowledging that she does use it.

“I use ChatGPT for generic questions,” she said, adding that, “often I see it give NOT accurate answers.” The data that has been fed into the chatbot must be “accurate and current,” Sastry cautioned.

She offered the example of a friend who went on a 600-mile bicycle trip and used GPS directions. All went well until the friend was confronted with a bridge that was broken. The GPS had no immediate solution other than to continue insisting that the bicyclist go over the bridge.

It was about that time in the forum when ChatGPT’s voice said that the chatbot “may occasionally produce nonsensical answers.”

Lloyd echoed Sastry’s comment: “You have to realize it’s open-source information. Bad data in, bad data out.”

“You sometimes hear ChatGPT ‘hallucinating,’” Staley said.

Perrault agreed. “If you try to play chess against it, pretty soon it will produce moves that are impossible.”

“ChatGPT or any other model depends on how much work you put into it,” Sastry said. But at a certain point, after putting in a lot of information, “you really think about how much time you’re going to spend” doing all that, and whether it might be easier to just do it yourself.

Staley asked, “Is ChatGPT creative?” He answered his own question by noting, “I asked it to write poetry” in the style of various poets. “I find (the poems) terrible.”

When an audience member questioned the panel about the dangers of chatbots, Perrault said that “it isn’t necessarily about sources, it’s the ability to flood the zone” with inaccurate information. He pointed to a recent incident in which the stock market reacted in something close to panic after a fake image of an explosion at the Pentagon went viral.

While the panelists were mixed in their enthusiasm for ChatGPT, the topic was timely: the day after the discussion, news broke that the Federal Trade Commission is investigating OpenAI, the maker of ChatGPT, for possible violations of consumer protection law.

The FTC is seeking extensive records from OpenAI about its handling of personal data, its potential to give users inaccurate information and its “capacity to generate statements about real individuals that are false, misleading, or disparaging.”